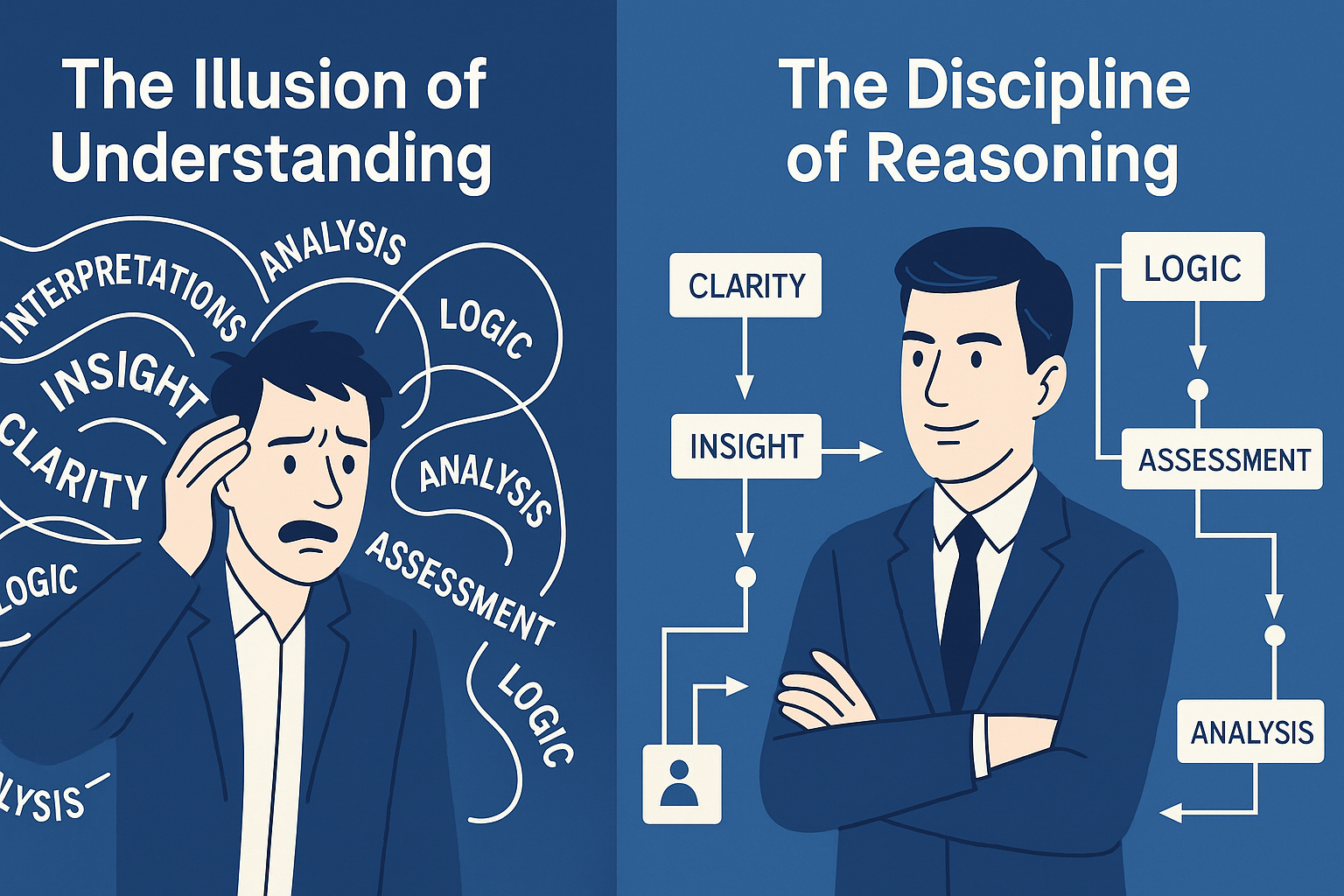

AI adoption is accelerating across every sector. Leaders see the potential to streamline operations, improve decision making, and raise the performance of their marketing and technology functions. Most teams begin with General Purpose AI (GPI): systems designed to serve every user in every domain. They generate fluent, confident answers, but fluency is not reasoning. GPI is optimized for engagement and perceived clarity, not analytical discipline.

Special Purpose AI (SPI) takes a different approach. SPI is constrained, grounded, and aligned to a defined business domain. It trades broad conversational appeal for accuracy, structure, and dependable reasoning. When the distinction between GPI and SPI is not understood, organizations unintentionally rely on systems that sound correct but may not be grounded in evidence. For businesses that depend on clarity, that gap creates risk. If AI is going to support real decision making, it must operate with explicit constraints, a defined persona, domain knowledge, and purpose fit.

Quick Takeaway: Four Requirements for Getting Real Value from AI

- Define clear constraints for how the AI should think.

- Use a disciplined persona to control behavior.

- Ground the AI in domain knowledge.

- Match the AI’s style to the task purpose.

Definitions

- General Purpose AI (GPI): Broad conversational systems not tailored to a specific domain and optimized for perceived clarity and engagement.

- Special Purpose AI (SPI): Constrained, domain-grounded systems built to deliver accurate, structured reasoning for defined business functions.

1st: Explicit constraints. If AI is going to support clear thinking in business, it must be told how to think, not merely how to sound. This means specifying tone, analytical posture, error handling, and limits on inference. When these constraints are applied, the output becomes more stable and more transparent, shifting from conversational engagement to structured reasoning.

- GPI Example: Ask GPI for a marketing recommendation and it may produce a confident but speculative answer, filling in assumptions it cannot verify. The result may read well but lacks grounding.

- SPI Example: With explicit constraints, SPI evaluates options using defined criteria, known limits, and approved frameworks. It produces structured reasoning, not stylistic improvisation.
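As a minimal sketch of what "explicit constraints" can look like in practice, the Python below captures the four areas named above (tone, analytical posture, error handling, and limits on inference) as a structured object and renders them into plain-language system instructions. The class name `ReasoningConstraints`, its fields, and the instruction wording are hypothetical illustrations, not a standard API; how the rendered text is passed to a model depends on the provider you use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReasoningConstraints:
    """Hypothetical container for the constraint areas named in the text."""
    tone: str
    analytical_posture: str
    error_handling: str
    inference_limits: tuple[str, ...] = ()

    def to_instructions(self) -> str:
        """Render the constraints as plain-language system instructions."""
        lines = [
            f"Tone: {self.tone}",
            f"Analytical posture: {self.analytical_posture}",
            f"When information is missing or uncertain: {self.error_handling}",
        ]
        # Each inference limit becomes an explicit, numbered rule.
        for i, limit in enumerate(self.inference_limits, start=1):
            lines.append(f"Inference limit {i}: {limit}")
        return "\n".join(lines)


# Illustrative constraints for the marketing-recommendation example above.
marketing_constraints = ReasoningConstraints(
    tone="neutral and concise",
    analytical_posture="evaluate options against stated criteria before recommending",
    error_handling="say what is unknown instead of guessing",
    inference_limits=(
        "Do not assume budget, audience, or channel data that was not provided.",
        "Label every assumption explicitly as an assumption.",
    ),
)

print(marketing_constraints.to_instructions())
```

Keeping constraints in a structured object rather than a free-form prompt makes them reviewable and versionable, which supports the stability and transparency described above.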

2nd: A well-defined persona. A persona is not a creative voice; it is a behavioral framework that enforces consistency. It restricts the system to predictable modes of analysis and prevents drift into generalized conversational behavior, giving organizations repeatable, reliable responses that support operational clarity.

- GPI Example: Without a persona, GPI tends to fall into conversational patterns intended to please the user, such as hedging, elaboration, or generating follow-on questions.

- SPI Example: A disciplined persona locks the system into a stable reasoning posture. It avoids drift, maintains consistency, and behaves predictably across tasks.
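One way to make a persona enforceable rather than aspirational is to pair its instructions with an automated check on the output. The Python sketch below assumes a hypothetical persona that must answer in three labeled sections and must avoid follow-on questions and flattery; the section names, the regex patterns, and the `check_drift` function are illustrative choices, not part of any particular product.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """Hypothetical persona: a behavioral contract, not a creative voice."""
    name: str
    required_sections: tuple[str, ...]
    # Regex patterns for conversational habits this persona should avoid.
    disallowed_patterns: tuple[str, ...]


def check_drift(persona: Persona, response: str) -> list[str]:
    """Return the drift problems found in a response (empty list means on-persona)."""
    problems = []
    for section in persona.required_sections:
        # Each required section must appear at the start of a line as "<Section>:".
        if not re.search(rf"^{re.escape(section)}:", response, flags=re.MULTILINE):
            problems.append(f"missing section: {section}")
    for pattern in persona.disallowed_patterns:
        if re.search(pattern, response, flags=re.IGNORECASE):
            problems.append(f"disallowed pattern: {pattern}")
    return problems


analyst = Persona(
    name="decision-support analyst",
    required_sections=("Findings", "Assumptions", "Recommendation"),
    disallowed_patterns=(r"would you like me to", r"great question"),
)

on_persona = "Findings: ...\nAssumptions: none\nRecommendation: option B"
drifted = "Great question! Option B looks good. Would you like me to expand?"

print(check_drift(analyst, on_persona))  # []
print(check_drift(analyst, drifted))
```

In practice a failed check might trigger a regeneration or a human review; the point is that drift becomes something measurable rather than a matter of impression.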

3rd: Domain grounding. Businesses operate on specific constraints, real data, and clear definitions. When an AI system is anchored in domain documents, approved frameworks, and explicit terminology, its output becomes verifiable. Instead of generating plausible statements from statistical patterns alone, it retrieves, organizes, and applies knowledge from sources the organization trusts. This step is central to reducing hallucinations and keeping the AI inside the business’s actual reality.

- GPI Example: When asked about a domain-specific issue, GPI relies on general training patterns. It may produce industry-sounding terms that appear correct but lack relevance.

- SPI Example: SPI references domain documents, internal models, and approved definitions, and draws its answers from those sources. This substantially reduces hallucinations and keeps analysis tied to your organization’s reality.
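The core idea of grounding, answering only from approved sources and declining when none apply, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: it scores documents by simple keyword overlap, whereas real systems typically use retrieval-augmented generation (RAG) with embedding search. The `Document` type, the stopword list, the `min_overlap` threshold, and the sample document IDs and texts are all hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical minimal stopword list so common words do not count as matches.
STOPWORDS = frozenset({"a", "an", "and", "as", "how", "in", "is", "of",
                       "or", "should", "the", "to", "we", "what"})


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens minus stopwords; a stand-in for real indexing."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(query: str, corpus: list[Document], min_overlap: int = 2) -> list[Document]:
    """Return approved documents sharing at least `min_overlap` terms with the query, best first."""
    query_terms = tokenize(query)
    scored = []
    for doc in corpus:
        overlap = len(query_terms & tokenize(doc.text))
        if overlap >= min_overlap:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored]


def grounded_context(query: str, corpus: list[Document]) -> str:
    """Build context only from approved sources, or decline when none apply."""
    hits = retrieve(query, corpus)
    if not hits:
        # Declining is the grounded behavior: no trusted source, no answer.
        return "INSUFFICIENT GROUNDING: no approved source covers this question."
    return "\n".join(f"[{doc.doc_id}] {doc.text}" for doc in hits)


approved = [
    Document("glossary-07", "Qualified lead: a contact that meets the agreed budget and timeline criteria."),
    Document("policy-12", "Campaign spend above the quarterly cap requires CFO approval."),
]

print(grounded_context("What counts as a qualified lead?", approved))
print(grounded_context("How should we price the new product in Japan?", approved))
```

The first question matches the approved glossary entry; the second matches nothing, so the sketch declines rather than improvising an answer. The returned context would then be handed to the model along with an instruction to answer only from it.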

4th: Purpose fit. AI configured for creative writing is not suited to analysis, planning, or client strategy. Applying creative, conversational behavior to analytical work introduces confusion: smooth language can hide weak reasoning, and the output may appear insightful without the underlying structure needed for real decisions. Business leaders must recognize this distinction to prevent unintentional misuse.

- GPI Example: Use GPI for analysis and it may default to narrative explanations that mask missing logic.

- SPI Example: SPI uses an analytical style tailored to decision support. It emphasizes structure over fluency. It is designed for clarity rather than entertainment.
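Purpose fit can be made explicit by routing each task to a mode chosen for that purpose, rather than reusing one conversational setup for everything. This Python sketch assumes the four task purposes mentioned above and a hypothetical `ModeConfig`; `temperature` mirrors the sampling setting many model APIs expose, but the specific values and style labels are illustrative assumptions, not recommendations.

```python
from dataclasses import dataclass
from enum import Enum


class Purpose(Enum):
    CREATIVE = "creative"
    ANALYSIS = "analysis"
    PLANNING = "planning"
    CLIENT_STRATEGY = "client_strategy"


@dataclass(frozen=True)
class ModeConfig:
    style: str
    temperature: float       # sampling randomness; values below are illustrative assumptions
    require_structure: bool  # whether output must use labeled sections


# Hypothetical routing table: analytical purposes never inherit creative settings.
MODES = {
    Purpose.CREATIVE: ModeConfig("narrative, exploratory", 0.9, False),
    Purpose.ANALYSIS: ModeConfig("structured, evidence-first", 0.2, True),
    Purpose.PLANNING: ModeConfig("structured, evidence-first", 0.2, True),
    Purpose.CLIENT_STRATEGY: ModeConfig("structured, evidence-first", 0.2, True),
}


def select_mode(task_purpose: str) -> ModeConfig:
    """Map a declared task purpose to its mode; unknown purposes fail loudly."""
    try:
        return MODES[Purpose(task_purpose)]
    except ValueError:
        raise ValueError(f"Unrecognized task purpose: {task_purpose!r}") from None


print(select_mode("analysis"))
```

Failing loudly on an unrecognized purpose is a deliberate design choice here: it keeps analytical work from silently falling back to a creative, conversational configuration.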

It is also worth addressing a reasonable objection directly: modern General Purpose AI systems are getting materially better. They reason more clearly, follow instructions more reliably, and require less correction than earlier generations.

This does not invalidate the case for Special Purpose AI. It sharpens it.

Model improvements increase capability, not intent. They improve how well an AI can think, but not what it should think about or the constraints under which that thinking should occur. Without explicit grounding, boundaries, and posture, even very capable systems will still optimize for plausibility over correctness and fluency over decision quality.

In practice, this means SPI is no longer about compensating for weak models. It is about enforcing discipline. It is about making intent explicit, constraining reasoning to what matters, and ensuring outputs are reliable enough to be used in real business decisions.

As General Purpose AI improves, the cost of building Special Purpose AI drops. The need for it does not.

FAQ

What is the difference between GPI and SPI?

GPI provides fluent but general answers, while SPI uses constraints, domain knowledge, and structure to support accurate decision making.

Why does SPI improve business decisions?

SPI avoids speculation, relies on verified information, and produces reasoning aligned to the organization’s real environment.

References and Notes

Ouyang, L. et al. (2022). Training language models to follow instructions with human feedback. https://arxiv.org/abs/2203.02155

Christiano, P. et al. (2017). Deep reinforcement learning from human preferences. https://arxiv.org/abs/1706.03741

Bender, E. M., Gebru, T., McMillan-Major, A., Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? https://dl.acm.org/doi/10.1145/3442188.3445922

Bommasani, R. et al. (2021). On the opportunities and risks of foundation models. https://arxiv.org/abs/2108.07258

Huang, L. et al. (2023). A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. https://arxiv.org/abs/2311.05232

Dang, A.-H., Tran, V., Nguyen, L.-M. (2025). Survey and analysis of hallucinations in large language models. https://www.frontiersin.org/articles/10.3389/frai.2025.1622292/full

Liu, N. F. et al. (2024). Lost in the middle: How language models use long contexts. https://aclanthology.org/2024.tacl-1.9/

Ji, Z. et al. (2023). Towards mitigating LLM hallucination via self reflection. https://aclanthology.org/2023.findings-emnlp.123.pdf

LiveScience (2025). AI hallucinates more frequently as it gets more advanced. https://www.livescience.com/technology/artificial-intelligence/ai-hallucinates-more-frequently-as-it-gets-more-advanced-is-there-any-way-to-stop-it-from-happening-and-should-we-even-try

Financial Times (2025). The hallucinations that haunt AI. https://www.ft.com/content/7a4e7eae-f004-486a-987f-4a2e4dbd34fb

In this article, the term ‘general-purpose AI’ refers to AI systems that function today as a general-purpose technology, widely applicable across sectors. It does not refer to the AI research usage of ‘general-purpose AI’, meaning domain-general cognitive systems or AGI.

References and Semantic Distinctions

Table: Distinctions Among Commonly Confused AI Terms

| Term | Domain of Use | Definition | What It Describes | Status Today | Potential Source of Confusion |
| --- | --- | --- | --- | --- | --- |
| General-Purpose AI (this article, observable use) | Industry, applied AI, business contexts | AI systems that can be applied across many tasks and industries; broadly useful tools | Functionality and applicability of current LLMs and multimodal models | Real and observable | Can be mistaken for cognitive generality when none exists |
| General-Purpose AI (research meaning) | AI research, AGI discussions, policy | AI capable of domain-general reasoning and learning across tasks without retraining | Aspirational cognitive capability; AGI-adjacent | Not yet real; developmental goal | Shares the same phrase as the applied usage but refers to a fundamentally different concept |
| Artificial General Intelligence (AGI) | Research, governance, long-horizon forecasting | Hypothetical AI system with human-level or broader general cognitive competence | Goal of long-term development, not present capability | Not yet achieved | Often conflated with “general-purpose AI” in research contexts |
| General-Purpose Technology (GPT) | Economics, innovation theory | Technology that transforms multiple sectors and enables widespread complementary innovation (e.g., electricity, computing) | Structural economic role of transformative technologies | Real and established category | The acronym “GPT” overlaps with model branding and with general-purpose AI language |
| GPT (Generative Pre-trained Transformer) | Machine learning, commercial AI | Transformer-based language models pre-trained to predict the next token and generate text (and now multimodal content) | OpenAI’s model family behind ChatGPT; other LLMs such as Claude and Gemini use related transformer architectures but are not GPT models | Real and widely deployed | Acronym matches “General-Purpose Technology,” adding to confusion |
| Special-Purpose AI | Industry, applied engineering | AI designed and tuned for a specific domain or task, often optimized for reliability and accuracy in that scope | Domain-specific tools, RAG systems, custom workflows | Real and rapidly adopted | May be mistaken as less advanced when actually more effective for defined work |
| Frontier AI / Foundation Models | Policy, lab disclosures, standards discussions | Large-scale models trained on broad datasets and capable of performing many tasks with fine-tuning or prompting | Current LLMs and multimodal agents | Real and evolving | Sometimes conflated with both AGI and general-purpose AI despite being distinct |